Image-to-video: AI technology for animating static photos and illustrations

The transformative power of image-to-video AI technology

The digital landscape is shifting from static imagery to dynamic motion, and image-to-video AI is driving that change. The technology relies on generative neural networks trained on millions of image and video pairs. These systems learn to predict realistic motion, physics, lighting transitions, and human expressions.

When presented with a static image, the AI analyses the subject's pose, perspective, and context to generate a natural and coherent animation sequence. The result can range from a subtle, cinematic camera pan across a landscape to a portrait where the subject blinks or offers a slight, lifelike smile. The capability to instill personality and emotion into still frames is what sets contemporary tools apart from earlier, more rigid forms of automation.

How modern AI animates static visuals

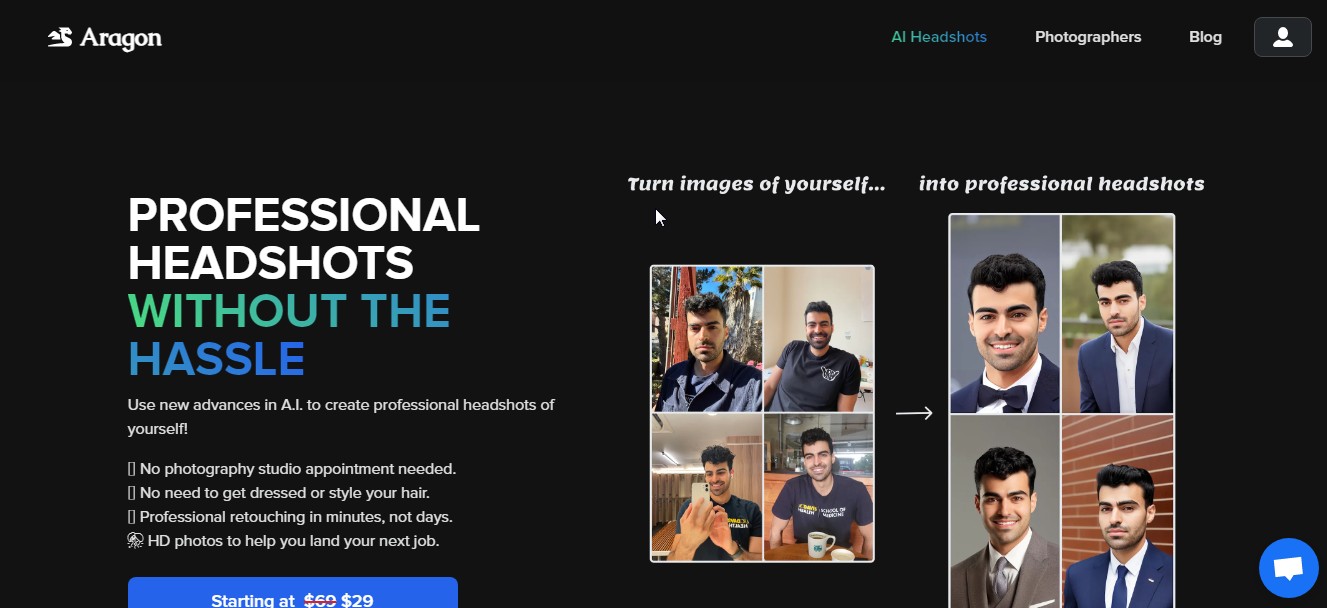

Converting a still image into a video is complex under the hood but simple for the end user. Leading platforms have streamlined creation into a workflow that often requires just three steps. First, a creator uploads a high-quality image in a common format such as JPEG or PNG. Second, the creator customizes the result by selecting a motion template, describing the desired action in a text prompt, or adjusting parameters such as duration and aspect ratio. Third, with a click to generate, the AI model interprets these inputs, renders the motion, and produces a polished video clip ready for download and sharing.
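The three steps above can be sketched as a small request object that is checked before generation. This is a minimal illustration only: `AnimationRequest`, `validate_request`, `build_payload`, the list of aspect ratios, and the rule that a request needs either a prompt or a motion template are all assumptions made for this example, and they do not describe any particular platform's API.

```python
from dataclasses import dataclass, asdict
from pathlib import Path
from typing import List, Optional

# Hypothetical limits for illustration; real platforms define their own.
SUPPORTED_EXTENSIONS = {".jpg", ".jpeg", ".png"}
SUPPORTED_ASPECT_RATIOS = {"16:9", "9:16", "1:1"}


@dataclass
class AnimationRequest:
    """Step 1 (upload) and step 2 (customization) gathered in one place."""
    image_path: str
    prompt: Optional[str] = None           # free-text description of the motion
    motion_template: Optional[str] = None  # name of a preset motion, if any
    duration_seconds: float = 5.0
    aspect_ratio: str = "16:9"


def validate_request(request: AnimationRequest) -> List[str]:
    """Return a list of problems; an empty list means the request looks usable."""
    problems = []
    if Path(request.image_path).suffix.lower() not in SUPPORTED_EXTENSIONS:
        problems.append("image must be JPEG or PNG")
    # Assumption: at least one motion instruction is required.
    if not request.prompt and not request.motion_template:
        problems.append("provide a text prompt or a motion template")
    if request.duration_seconds <= 0:
        problems.append("duration must be positive")
    if request.aspect_ratio not in SUPPORTED_ASPECT_RATIOS:
        problems.append("unsupported aspect ratio")
    return problems


def build_payload(request: AnimationRequest) -> dict:
    """Step 3 (generate): produce the dictionary a service could receive."""
    problems = validate_request(request)
    if problems:
        raise ValueError("; ".join(problems))
    return asdict(request)
```

A request such as `AnimationRequest(image_path="portrait.png", prompt="subject blinks and smiles slightly")` passes validation and yields a plain dictionary. How that dictionary would be sent to a model depends entirely on the platform and is left out here.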

Different tools specialize in distinct types of animation, offering creators a spectrum of creative possibilities. Some models are engineered for cinematic, narrative-led motion. Google's Veo, for instance, is noted for generating clips with smooth camera movements that mimic professional dolly shots, realistic ambient lighting shifts, and a convincing sense of depth that makes two-dimensional photos feel three-dimensional. Its ability to handle emotional nuance and dialogue makes it a powerful tool for filmmakers and storytellers. Conversely, platforms like TikTok's AI Alive are tuned for the demands of social media, specializing in expressive micro-gestures and facial animations that sync with audio, with the aim of increasing engagement in short-form feeds.

The underlying technology continues to evolve rapidly, with new features pushing creative boundaries. Advanced systems now offer object insertion and removal, dynamic prompt-based editing, and even integrated AI voiceovers with accurate lip-sync. This allows for an unprecedented level of control where creators can fine-tune gestures, change camera angles, or alter the weather in a scene long after the original image was captured. The progression from basic animation to directed, emotive video generation marks a significant milestone, transforming AI from a simple effects tool into a collaborative creative director.

AI Catalog's chief editor
