Copyright issues and legal concerns in AI-generated images

When AI images risk infringing others

AI-generated images can infringe existing copyrights and trademarks. Training data for popular models is built from billions of images scraped online, including many copyrighted works taken without explicit consent, which fuels concern from photographers, illustrators and studios about unlicensed use.

When a prompt pushes a model to closely imitate a recognisable character, franchise aesthetic, or proprietary style, the output can reproduce protected elements in ways that courts may eventually treat as infringement. Law firms now warn that “Ghibli style” or “in the style of [living artist]” prompts carry increased risk, especially if the resulting image competes in the same market as the original creator’s work.

Trademark issues also surface if logos, branded packaging, or mascots appear in generated scenes, since trademark law focuses on consumer confusion rather than pure creativity. Users who assume that AI tools automatically filter these problems sometimes discover that the legal liability for publication still sits with them.

Human contribution and hybrid protection

Some creators try to secure limited protection by adding substantial human input on top of AI outputs. Guidance from the U.S. Copyright Office allows registration of the human-authored aspects of hybrid works, such as complex compositing, detailed retouching, or original layout and sequencing built around AI-generated elements. In these cases, copyright covers the specific selection and arrangement choices made by the human editor, not the underlying AI fragments themselves. Where that line falls is fact-specific, and registering a work demands honesty about which parts were human made.

Applicants must disclose the presence of more than de minimis AI content and “disclaim” it, claiming only the portions they actually created. Failure to do so can lead to cancelled registrations or weakened enforcement in court. Artists who iterate heavily on AI drafts in tools such as Photoshop, painting over, relighting, and recomposing scenes, stand on firmer ground than those who simply download a raw output and upload it as finished art.

Practical risk management for AI visuals

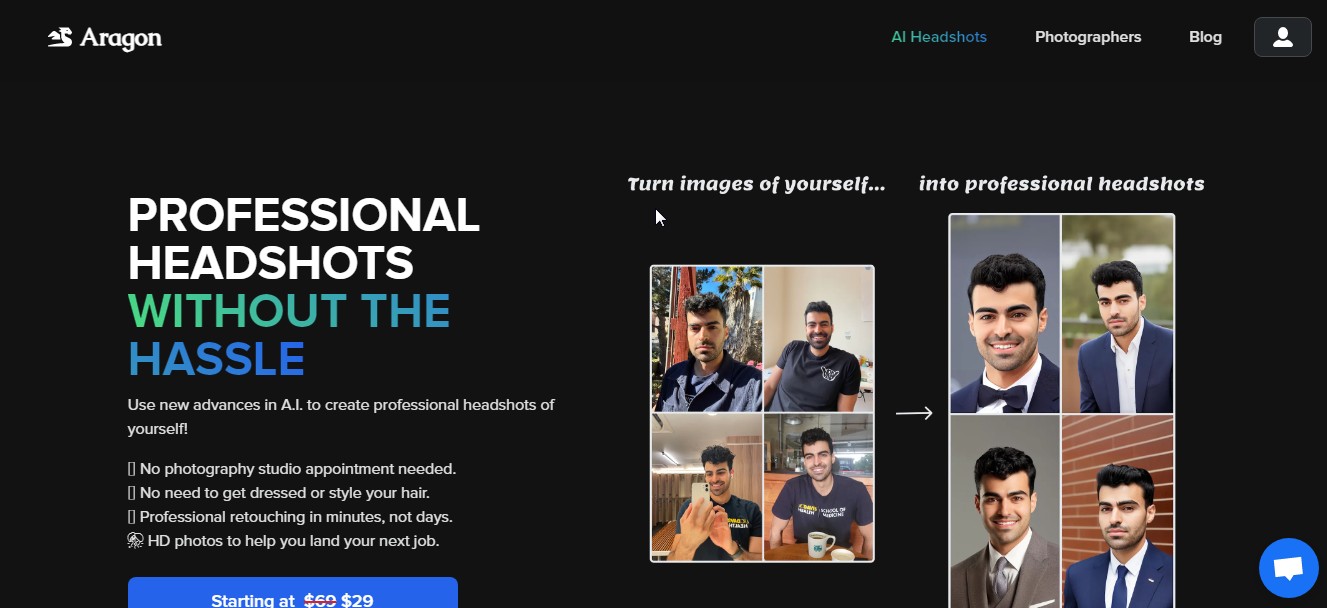

Navigating this landscape requires more than blind trust in “AI safe” labels. Lawyers recommend avoiding prompts that name living artists, specific film studios, or trademarked characters, and instead focusing on descriptive language that conveys mood, lighting, composition and era without direct stylistic copying. Building internal prompt libraries that steer clear of obvious IP references reduces the chance that outputs will closely mirror someone else’s catalogue.
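As a rough illustration of how an internal prompt library might be screened, the sketch below checks a prompt against a team-maintained blocklist before it is saved or sent to a model. The names `BLOCKED_TERMS` and `flag_prompt` and the list entries are assumptions made for this example. They are not a legal standard, and a check like this cannot replace legal review.

```python
import re

# Hypothetical blocklist: phrases a team has decided to keep out of prompts.
# The entries are illustrative only; a real list would be agreed with counsel.
BLOCKED_TERMS = ["ghibli", "in the style of"]


def flag_prompt(prompt, blocked_terms=BLOCKED_TERMS):
    """Return the blocked terms that appear in the prompt.

    Matching is case-insensitive and anchored on word boundaries, so a
    term is flagged only when it appears as a whole word or phrase.
    """
    found = []
    for term in blocked_terms:
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            found.append(term)
    return found


if __name__ == "__main__":
    risky = "A forest spirit, Ghibli style"
    descriptive = "misty forest at dawn, soft rim lighting, 1970s film grain"
    print(flag_prompt(risky))
    print(flag_prompt(descriptive))
```

A prompt that comes back with an empty list has only passed this simple keyword check. It can still produce an output that resembles protected work, so generated images still need review before publication.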

Creators who rely on AI images in products can also layer protection by combining multiple sources, heavy manual editing and original photography or illustration elements. Keeping AI outputs as backgrounds, textures or inspiration, while foreground elements are clearly human made, reduces dependence on a legally ambiguous base. For high-stakes uses such as book covers, packaging or flagship ad campaigns, commissioning bespoke work or negotiating licences with rights holders remains the safest route.

AI Catalog's chief editor
